NVIDIA said it has set a record for large language model (LLM) inference speed, announcing that an NVIDIA DGX B200 node with eight NVIDIA Blackwell GPUs delivered more than 1,000 tokens per second (TPS) per user on the 400-billion-parameter Llama 4 Maverick model.

NVIDIA said the model is the largest and most powerful in the Llama 4 collection and that the speed was independently measured by the AI benchmarking service Artificial Analysis.

NVIDIA added that Blackwell reaches 72,000 TPS/server in its highest-throughput configuration.
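As a rough illustration of what the per-user figure means in practice, a decode rate can be converted into time per token and time per response. This is back-of-the-envelope arithmetic only: the 500-token response length is a hypothetical example, the function names are made up for this sketch, and prefill time is ignored.

```python
def per_token_latency_ms(tokens_per_second: float) -> float:
    """Average time between consecutive output tokens, in milliseconds."""
    return 1000.0 / tokens_per_second


def response_time_s(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream num_tokens at a steady decode rate (prefill ignored)."""
    return num_tokens / tokens_per_second


PER_USER_TPS = 1_000  # from the article: more than 1,000 TPS per user

print(per_token_latency_ms(PER_USER_TPS))  # milliseconds per token
print(response_time_s(500, PER_USER_TPS))  # seconds for a hypothetical 500-token reply
```

The per-user and per-server figures come from different configurations, so dividing one by the other does not give a meaningful concurrency number.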

The company said it made software optimizations using TensorRT-LLM and trained a speculative decoding draft model using EAGLE-3 techniques. Combining these approaches yields a 4x speed-up over the best prior Blackwell baseline, NVIDIA said.
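The general idea behind speculative decoding can be sketched in a few lines: a small draft model proposes several tokens, and the large target model verifies them, keeping the longest matching prefix. The sketch below is a generic greedy draft-and-verify loop, not EAGLE-3 and not TensorRT-LLM code. The toy `target` and `draft` functions and all other names are hypothetical stand-ins for real models.

```python
from typing import Callable, List

NextToken = Callable[[List[int]], int]  # greedy "model": sequence -> next token


def greedy_decode(model: NextToken, prompt: List[int], max_new: int) -> List[int]:
    """Baseline: one target-model call per generated token."""
    out = list(prompt)
    for _ in range(max_new):
        out.append(model(out))
    return out


def speculative_decode(target: NextToken, draft: NextToken,
                       prompt: List[int], max_new: int, k: int = 4) -> List[int]:
    """Greedy draft-and-verify; the output matches target-only greedy decoding."""
    out = list(prompt)
    goal = len(prompt) + max_new
    while len(out) < goal:
        # 1) The cheap draft model proposes up to k tokens.
        proposal: List[int] = []
        for _ in range(min(k, goal - len(out))):
            proposal.append(draft(out + proposal))
        # 2) The target checks every proposed position. On real hardware this
        #    is a single batched forward pass; here it is a plain loop.
        accepted: List[int] = []
        for tok in proposal:
            expected = target(out + accepted)
            if tok == expected:
                accepted.append(tok)
            else:
                accepted.append(expected)  # keep the target's token, drop the rest
                break
        else:
            # Every draft token matched: the verification pass also yields
            # one extra token from the target.
            if len(out) + len(accepted) < goal:
                accepted.append(target(out + accepted))
        out.extend(accepted)
    return out


# Toy stand-ins for real models (hypothetical).
def target(seq: List[int]) -> int:
    return (seq[-1] * 3 + 1) % 7


def draft(seq: List[int]) -> int:
    # Agrees with the target except after token 4, to force a rejection.
    return 0 if seq[-1] == 4 else (seq[-1] * 3 + 1) % 7


assert speculative_decode(target, draft, [1], 10) == greedy_decode(target, [1], 10)
```

The speed-up comes from step 2: when the draft is usually right, several tokens are confirmed per expensive target pass instead of one.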

“The optimizations described below significantly increase performance while preserving response accuracy,” NVIDIA said in a blog post published yesterday. “We leveraged FP8 data types for GEMMs, Mixture of Experts (MoE), and Attention operations to reduce the model size and make use of the high FP8 throughput possible with Blackwell Tensor Core technology. Accuracy when using the FP8 data format matches that of Artificial Analysis BF16 across many metrics….”

“Most generative AI application contexts require a balance of throughput and latency, ensuring that many customers can simultaneously enjoy a ‘good enough’ experience. However, for critical applications that must make important decisions at speed, minimizing latency for a single client becomes paramount. As the TPS/user record shows, Blackwell hardware is the best choice for any task, whether you need to maximize throughput, balance throughput and latency, or minimize latency for a single user (the focus of this post).”
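To make the FP8 point in the quote more concrete, the sketch below simulates rounding values to the FP8 E4M3 format in plain Python and shows the weight-footprint arithmetic for a 400-billion-parameter model. It is an illustration under assumptions: the per-tensor scaling scheme and all function names are hypothetical, and NVIDIA's post as quoted here does not say which FP8 variant or scaling granularity was used.

```python
import math

E4M3_MAX = 448.0   # largest finite value in the FP8 E4M3 format
E4M3_MIN_EXP = -6  # exponent of the smallest normal value


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3-representable value (software simulation)."""
    if x == 0.0:
        return 0.0
    a = min(abs(x), E4M3_MAX)              # saturate instead of overflowing
    _, e = math.frexp(a)                   # a = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, E4M3_MIN_EXP)         # floor(log2(a)), clamped for subnormals
    step = 2.0 ** (exp - 3)                # 3 mantissa bits: 8 steps per power of two
    q = min(round(a / step) * step, E4M3_MAX)
    return math.copysign(q, x)


def quantize_dequantize(values):
    """Per-tensor scaling (an assumption): map the largest magnitude to E4M3_MAX."""
    amax = max(abs(v) for v in values)
    scale = amax / E4M3_MAX if amax > 0 else 1.0
    return [round_to_e4m3(v / scale) * scale for v in values]


weights = [0.02, -0.5, 0.13, 0.0071]  # made-up example values
restored = quantize_dequantize(weights)
for w, r in zip(weights, restored):
    print(f"{w:+.4f} -> {r:+.4f}")

# Weight storage only: 2 bytes per parameter in BF16 versus 1 byte in FP8.
PARAMS = 400e9
print(PARAMS * 2 / 1e9, "GB in BF16 vs", PARAMS * 1 / 1e9, "GB in FP8")
```

With three mantissa bits, values in the normal range are off by at most about 6% after rounding, which is why scaling factors and accuracy checks like those NVIDIA describes matter.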

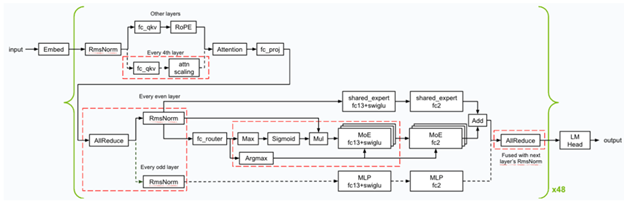

Below is an overview of the kernel optimizations and fusions (denoted in red-dashed squares) that NVIDIA applied to inference. NVIDIA implemented several low-latency GEMM kernels and applied various kernel fusions (such as FC13 + SwiGLU, FC_QKV + attn_scaling, and AllReduce + RMSnorm) so that Blackwell excels in the minimum-latency scenario.

Overview of the kernel optimizations & fusions used for Llama 4 Maverick
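The effect of a fusion such as FC13 + SwiGLU can be illustrated without any GPU code. The sketch below assumes that "FC13" denotes the gate and up projections of a Llama-style MLP (often called w1 and w3) merged into one matrix. It compares a three-step unfused version with a single-pass version that applies the activation as soon as each pair of outputs is ready. It shows the math only, and all names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def silu(v: float) -> float:
    return v / (1.0 + math.exp(-v))


def matvec(W: Matrix, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def unfused(W1: Matrix, W3: Matrix, x: Vector) -> Vector:
    """Three separate steps, each writing an intermediate result."""
    gate = matvec(W1, x)                              # step 1: gate projection
    up = matvec(W3, x)                                # step 2: up projection
    return [silu(g) * u for g, u in zip(gate, up)]    # step 3: SwiGLU


def fused_fc13_swiglu(W13: Matrix, x: Vector) -> Vector:
    """One pass over a stacked [W1; W3] matrix, activation applied in place."""
    h = len(W13) // 2
    out = []
    for i in range(h):
        g = sum(w * xi for w, xi in zip(W13[i], x))        # row i of W1
        u = sum(w * xi for w, xi in zip(W13[h + i], x))    # row i of W3
        out.append(silu(g) * u)                            # no intermediate vectors
    return out


W1 = [[0.5, -1.0], [0.25, 0.75]]
W3 = [[1.0, 0.5], [-0.5, 2.0]]
x = [1.0, 2.0]
a = unfused(W1, W3, x)
b = fused_fc13_swiglu(W1 + W3, x)
assert all(math.isclose(p, q) for p, q in zip(a, b))
```

On a GPU, the benefit of the fused form is fewer kernel launches and no round trip through memory for the intermediate gate and up vectors, which matters most when latency rather than throughput is the goal.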

NVIDIA optimized the CUDA kernels for GEMMs, MoE, and Attention operations to achieve the best performance on the Blackwell GPUs.

- Utilized spatial partitioning (also known as warp specialization) and designed the GEMM kernels to load data from memory efficiently, maximizing utilization of the enormous memory bandwidth that the NVIDIA DGX system offers: 64 TB/s of HBM3e bandwidth in total.

- Shuffled the GEMM weights into a swizzled format so that results can be loaded from Tensor Memory in a better layout after the matrix multiplications run on Blackwell’s fifth-generation Tensor Cores.

- Optimized the performance of the attention kernels by dividing the computations along the sequence length dimension of the K and V tensors, allowing computations to run in parallel across multiple CUDA thread blocks. In addition, NVIDIA utilized distributed shared memory to efficiently reduce results across the thread blocks in the same thread block cluster without the need to access the global memory.
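The attention optimization in the last bullet, splitting work along the sequence length of K and V and then reducing the partial results, can be sketched in plain Python. Each chunk plays the role of one thread block and returns a local maximum, a local sum of exponentials, and an unnormalized output. The final merge corresponds to the cross-block reduction. This is a numerical illustration only, not NVIDIA's kernel: the function names are hypothetical and the usual 1/sqrt(d) score scaling is omitted for brevity.

```python
import math
from typing import List, Tuple

Vec = List[float]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def attention_reference(q: Vec, K: List[Vec], V: List[Vec]) -> Vec:
    """Single-query softmax attention computed in one piece."""
    scores = [dot(q, k) for k in K]
    m = max(scores)
    w = [math.exp(s - m) for s in scores]
    total = sum(w)
    return [sum(wi * v[j] for wi, v in zip(w, V)) / total for j in range(len(V[0]))]


def partial_attention(q: Vec, K: List[Vec], V: List[Vec]) -> Tuple[float, float, Vec]:
    """Work of one 'thread block': local max, local exp-sum, unnormalized output."""
    scores = [dot(q, k) for k in K]
    m = max(scores)
    w = [math.exp(s - m) for s in scores]
    acc = [sum(wi * v[j] for wi, v in zip(w, V)) for j in range(len(V[0]))]
    return m, sum(w), acc


def attention_split_kv(q: Vec, K: List[Vec], V: List[Vec], num_splits: int) -> Vec:
    """Split K/V along the sequence dimension, then merge the partial results."""
    chunk = math.ceil(len(K) / num_splits)
    parts = [partial_attention(q, K[i:i + chunk], V[i:i + chunk])
             for i in range(0, len(K), chunk)]
    g = max(m for m, _, _ in parts)  # global max keeps the exponentials stable
    total = sum(s * math.exp(m - g) for m, s, _ in parts)
    return [sum(acc[j] * math.exp(m - g) for m, _, acc in parts) / total
            for j in range(len(V[0]))]


q = [0.1, -0.2, 0.3]
K = [[math.sin(i + j) for j in range(3)] for i in range(10)]
V = [[math.cos(i * j) for j in range(3)] for i in range(10)]
ref = attention_reference(q, K, V)
split = attention_split_kv(q, K, V, num_splits=4)
assert all(math.isclose(a, b, rel_tol=1e-9, abs_tol=1e-12) for a, b in zip(ref, split))
```

Because each chunk carries its own maximum and exp-sum, the chunks can be processed independently and in parallel, and the merge only needs a few numbers per chunk. That small exchange is what makes a reduction through shared memory attractive compared with a trip through global memory.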

The remainder of the blog can be found here.