AMD issued a raft of news at their Advancing AI 2025 event this week, an update on the company’s response to NVIDIA’s 90-plus percent market share dominance in the GPU and AI markets. And the company offered a sneak peak at what to expect from their next generation of EPYC CPUs and Instinct GPUs.

Here’s an overview of AMD’s major announcement:

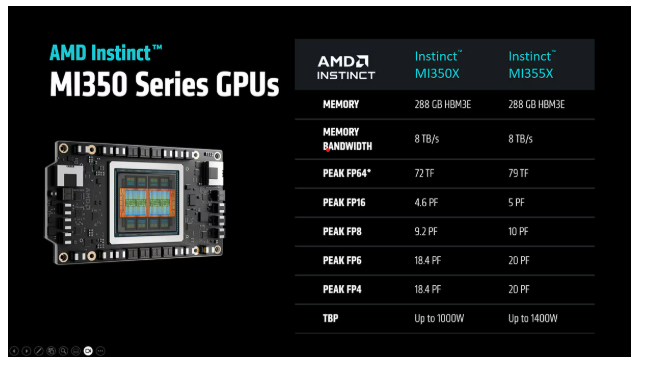

AMD MI350 Series GPUs

The headline announcement: AMD launched the Instinct MI350 Series that they said delivers up to 4x generation-on-generation AI compute improvement and up to a 35x leap in inferencing performance.

They offer memory capacity of 288GB HBM3E and bandwidth of up to 8TB/s along with air-cooled and direct liquid-cooled configurations.

And they support up to 64 GPUs in an air-cooled rack and up to 128 GPUs in a direct liquid-cooled racks delivering up to 2.6 exaFLOPS of FP4/FP6 performance in an industry standards-based infrastructure.

AMD CEO Lisa Su

“With the MI350 series, we’re delivering the largest generational performance leap in the history of Instinct, and we’re already deep in development of MI400 for 2026.” AMD CEO, Dr. Lisa Su, said. “[The MI400] is really designed from the ground up as a rack-level solution.”

On that front, AMD announced its “Helios” rack scale architecture, available next year, that will integrate a combination of the next generation of AMD technology including:

- Next-Gen AMD Instinct MI400 Series GPUs, which are expected to offer up to 432 GB of HBM4 memory, 40 petaflops of FP4 performance and 300 gigabytes per second of scale-out bandwidth3.

- Helios performance scales across 72 GPUs using the open standard UALink (Ultra Accelerator Link)to interconnect the GPUs and scale-out NICs. This is designed to let every GPU in the rack communicate as one unified system.

- 6th Gen AMD EPYC “Venice” CPUs, which will utilize the “Zen 6” architecture and are expected to offer up to 256 cores, up to 1.7X the performance and 1.6 TBs of memory bandwidth.

- AMD Pensando “Vulcano” AI NICs, which is UEC (Ultra Ethernet Consortium) 1.0 compliant and supports both PCIe and UALink interfaces for connectivity to CPUs and GPUs. It will also support 800G network throughput and an expected 8x the scale-out bandwidth per GPU3 compared to the previous generation.

ROCm 7 and Developer Cloud

A major area of advantage for NVIDIA is its dominance of the software development arena – the vast majority of AI application developers use NVIDIA’s CUDA programming platform. Developers who become adept at using CUDA tend to continue using… CUDA. Users of applications built on CUDA tend to want… serves using NVIDIA GPUs. Along with GPU performance, competing with NVIDIA on the AI software front is a major challenge for anyone trying to carve out a substantial share of the AI market.

This week, AMD introduced AMD ROCm 7 and the AMD Developer Cloud under what the company called a “developers first” mantra.

“Over the past year, we have shifted our focus to enhancing our inference and training capabilities across key models and frameworks and expanding our customer base,” said Anush Elangovan, VP of AI Software, AMD, in an announcement blog. “Leading models like llama 4, gemma 3, and Deepseek are now supported from day one, and our collaboration with the open-source community has never been stronger, underscoring our dedication to fostering an accessible and innovative AI ecosystem.”

Elangovan emphasized ROCm 7’s accessibility and scalability, including “putting MI300X-class GPUs in the hands of anyone with a GitHub ID…, installing ROCm with a simple pip install…, going from zero to Triton kernel notebook in minutes.”

Generally available in Q3 2025, ROCm 7 will deliver more than 3.5X the inference capability and 3X the training power compared to ROCm 6. This stems from advances in usability, performance, and support for lower precision data types like FP4 and FP6, Elangovan said. ROCm 7 also offers “a robust approach” to distributed inference, the result of collaboration with the open-source ecosystem, including such frameworks as SGLang, vLLM and llm-d.

AMD’s ROCm Enterprise AI debuts as an MLOps platform designed for AI operations in enterprise settings and includes tools for model tuning with industry-specific data and integration with structured and unstructured workflows. AMD said this is facilitated by partnerships “within our ecosystem for developing reference applications like chatbots and document summarizations.”

Rack-Scale Energy Efficiency

For the pressing problem of AI energy demand outstripping energy supply, AMD said it exceeded its “30×25” efficiency goal, achieving a 38x increase in node-level energy efficiency for AI-training and HPC, which the company said equates to a 97 percent reduction in energy for the same performance compared to systems from five years ago.

The company also set a 2030 goal to deliver a 20x increase in rack-scale energy efficiency from a 2024 base year, enabling a typical AI model that today requires more than 275 racks to be trained in under one rack by 2030, using 95 percent less electricity.

The company also set a 2030 goal to deliver a 20x increase in rack-scale energy efficiency from a 2024 base year, enabling a typical AI model that today requires more than 275 racks to be trained in under one rack by 2030, using 95 percent less electricity.

Combined with expected software and algorithmic advances, AMD said the new goal could enable up to a 100x improvement in overall energy efficiency.

Open Rack Scale AI Infrastructure

AMD announced its rack architecture for AI encompassing its 5th Gen EPYC CPUs, Instinct MI350 Series GPUs, and scale-out networking solutions including AMD Pensando Pollara AI NIC, integrated into an industry-standard Open Compute Project- and Ultra Ethernet Consortium-compliant design.

“By combining all our hardware components into a single rack solution, we are enabling a new class of differentiated, high-performance AI infrastructure in both liquid and air-cooled configurations,” AMD said.