Google today introduced its seventh-generation Tensor Processing Unit, “Ironwood,” which the company said is its most performant and scalable custom AI accelerator and the first designed specifically for inference.

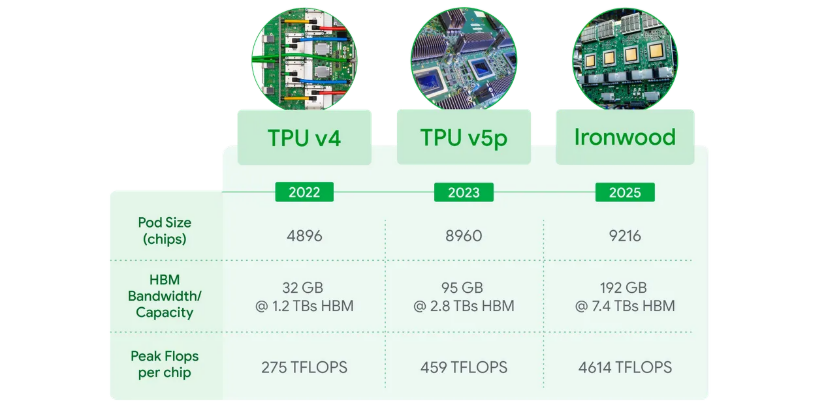

Ironwood scales up to 9,216 liquid-cooled chips linked via Inter-Chip Interconnect (ICI) networking spanning nearly 10 MW. It is a new component of the Google Cloud AI Hypercomputer architecture, built to optimize hardware and software together for AI workloads, according to the company. Ironwood lets developers use Google’s Pathways software stack to harness tens of thousands of Ironwood TPUs.

Ironwood represents a shift from responsive AI models, which provide real-time information for people to interpret, to models that provide the proactive generation of insights and interpretation, according to Google.

“This is what we call the ‘age of inference’ where AI agents will proactively retrieve and generate data to collaboratively deliver insights and answers, not just data,” the company said.

Ironwood is designed to manage the computation and communication demands of “thinking models,” encompassing large language models, Mixture of Experts (MoEs) and advanced reasoning tasks, which require massive parallel processing and efficient memory access. Google said Ironwood is designed to minimize data movement and latency on chip while carrying out massive tensor manipulations.

“At the frontier, the computation demands of thinking models extend well beyond the capacity of any single chip,” they said. “We designed Ironwood TPUs with a low-latency, high bandwidth ICI network to support coordinated, synchronous communication at full TPU pod scale.”

Ironwood comes in two sizes based on AI workload demands: a 256-chip configuration and a 9,216-chip configuration.

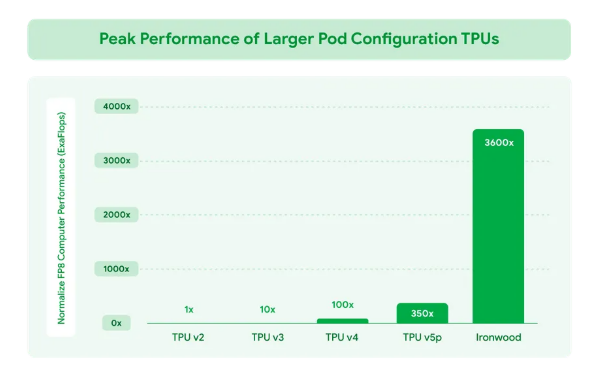

- When scaled to 9,216 chips per pod, Ironwood delivers a total of 42.5 exaflops, more than 24x the compute power of El Capitan, the No. 1 supercomputer on the Top500 list, which Google put at 1.7 exaflops. Each Ironwood chip has peak compute of 4,614 TFLOPs. “This represents a monumental leap in AI capability. Ironwood’s memory and network architecture ensures that the right data is always available to support peak performance at this massive scale,” they said.

- Ironwood also features SparseCore, a specialized accelerator for processing ultra-large embeddings common in advanced ranking and recommendation workloads. Expanded SparseCore support in Ironwood allows a wider range of workloads to be accelerated, extending beyond the traditional AI domain to financial and scientific domains.

- Pathways, Google’s ML runtime developed by Google DeepMind, enables distributed computing across multiple TPU chips. Pathways on Google Cloud is designed to make moving beyond a single Ironwood Pod straightforward, enabling hundreds of thousands of Ironwood chips to be composed together for AI computation.
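The pod-level numbers above follow from the per-chip figure by simple multiplication. A minimal sketch of that arithmetic, assuming perfect linear scaling across chips and using only the figures quoted in this article (the function and constant names are illustrative, not part of any Google API):

```python
# Figures quoted in the article; nothing here is measured.
PEAK_TFLOPS_PER_CHIP = 4_614   # peak compute per Ironwood chip
EL_CAPITAN_EXAFLOPS = 1.7      # El Capitan figure cited by Google
POD_SIZES = (256, 9_216)       # the two Ironwood configurations

TFLOPS_PER_EXAFLOP = 1_000_000  # 1 exaflop = 10**6 teraflops


def pod_exaflops(chips: int) -> float:
    """Peak pod compute in exaflops, assuming perfect linear scaling."""
    return chips * PEAK_TFLOPS_PER_CHIP / TFLOPS_PER_EXAFLOP


def ratio_to_el_capitan(chips: int) -> float:
    """Multiple of El Capitan's cited figure reached by a pod of this size."""
    return pod_exaflops(chips) / EL_CAPITAN_EXAFLOPS


if __name__ == "__main__":
    for chips in POD_SIZES:
        print(f"{chips:>5} chips: {pod_exaflops(chips):.2f} exaflops, "
              f"{ratio_to_el_capitan(chips):.1f}x El Capitan")
```

For the 9,216-chip pod this works out to roughly 42.5 exaflops, about 25 times the El Capitan figure, which is consistent with Google's "more than 24x" claim.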

Features include:

- Ironwood’s performance per watt is 2x that of Trillium, Google’s sixth-generation TPU announced last year. At a time when available power is one of the constraints on delivering AI capabilities, Google said Ironwood delivers significantly more capacity per watt for customer workloads. The company said its liquid cooling and optimized chip design can reliably sustain up to twice the performance of standard air cooling, even under continuous, heavy AI workloads. Ironwood is nearly 30x more power efficient than the company’s first Cloud TPU from 2018.

- Ironwood offers 192 GB of High Bandwidth Memory (HBM) per chip, 6x that of Trillium, designed to enable processing of larger models and datasets, reducing data transfers and improving performance.

- HBM bandwidth reaches 7.2 TBps per chip, 4.5x that of Trillium. This supports rapid data access, which is crucial for the memory-intensive workloads common in modern AI.

- Inter-Chip Interconnect (ICI) bandwidth has been increased to 1.2 Tbps bidirectional, 1.5x that of Trillium, enabling faster communication between chips and facilitating efficient distributed training and inference at scale.
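The "Nx Trillium" multiples in this list can be inverted to see what per-chip Trillium baselines they imply, and the per-chip memory figure can be scaled up to a full pod. The sketch below does only that arithmetic. The derived values are implied by the article's rounded multiples rather than quoted specifications, and the names used are hypothetical.

```python
CHIPS_PER_POD = 9_216  # largest Ironwood configuration cited in the article

# metric -> (Ironwood per-chip value, stated multiple of Trillium).
# Units are kept exactly as the article writes them.
IRONWOOD_SPECS = {
    "HBM capacity (GB)": (192.0, 6.0),
    "HBM bandwidth (TBps)": (7.2, 4.5),
    "ICI bandwidth (Tbps, bidirectional)": (1.2, 1.5),
}


def implied_trillium(ironwood_value: float, multiple: float) -> float:
    """Trillium baseline implied by an 'Nx Trillium' claim (an estimate only)."""
    return ironwood_value / multiple


def pod_hbm_petabytes(chips: int = CHIPS_PER_POD,
                      gb_per_chip: float = 192.0) -> float:
    """Aggregate HBM across a pod in decimal petabytes (1 PB = 10**6 GB)."""
    return chips * gb_per_chip / 1_000_000


if __name__ == "__main__":
    for metric, (value, multiple) in IRONWOOD_SPECS.items():
        baseline = implied_trillium(value, multiple)
        print(f"{metric}: Ironwood {value:g}, implied Trillium {baseline:g}")
    print(f"HBM per 9,216-chip pod: {pod_hbm_petabytes():.2f} PB")
```

Because the multiples are rounded, the implied baselines should be read as approximate and checked against Trillium's published specifications before being relied on.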